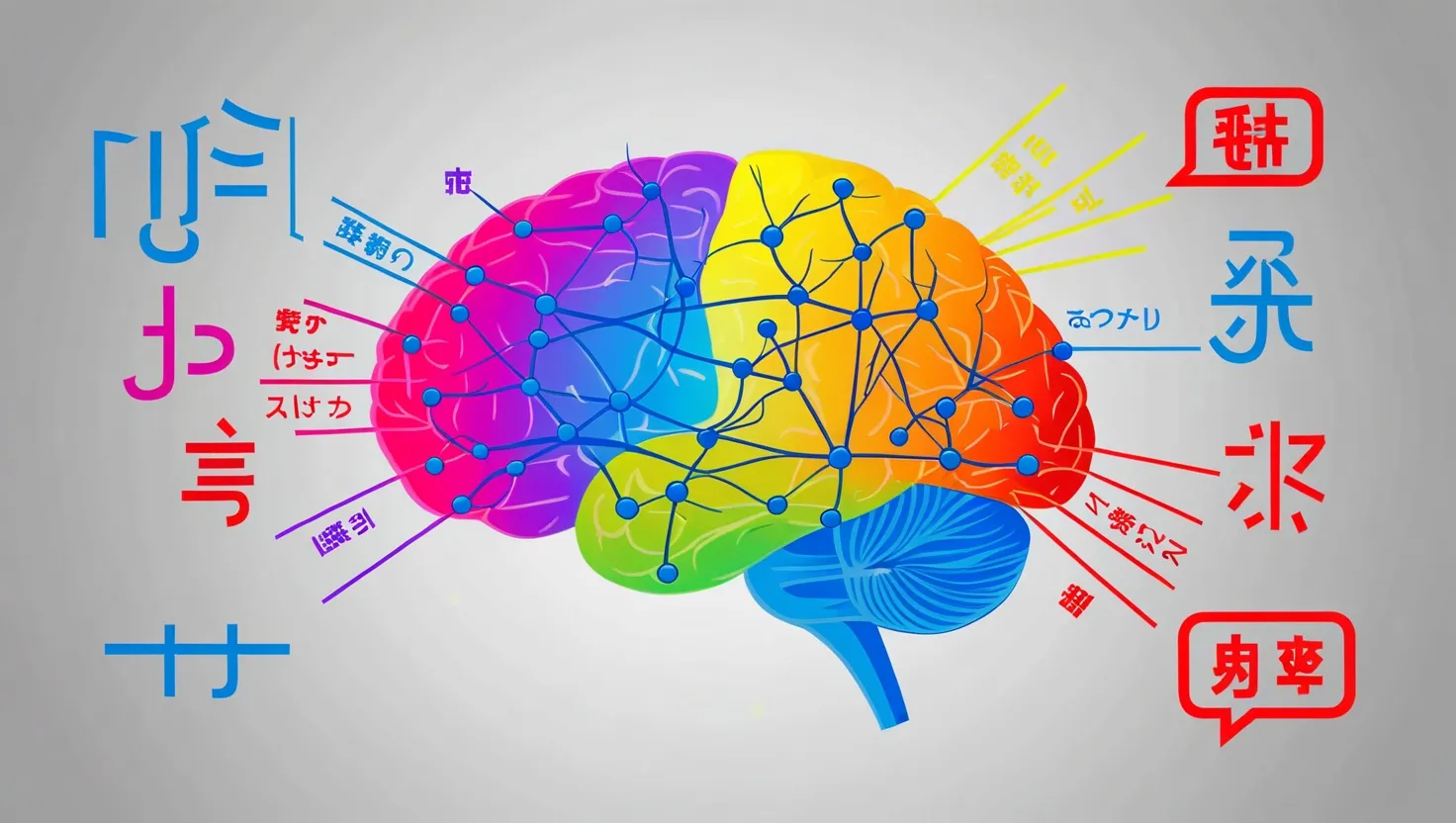

At its core, an artificial neural network is essentially a complex mathematical equation. Loosely inspired by the way neurons connect in the human brain, artificial neurons are linked together to form a network that can be trained to perform specific tasks.

The fundamental basis of an artificial neuron can be traced back to a simple equation from school math: Z(X) = W · X + B, which has the same form as the familiar straight-line equation y = mx + b. In this equation, ‘X’ represents the input, ‘W’ is the weight, ‘B’ is a bias term, and ‘Z(X)’ is the resulting output. The concept is straightforward: input ‘X’ is multiplied by a weight ‘W’, and then a bias ‘B’ is added to produce the output ‘Z(X)’.
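To make the equation concrete, here is a minimal Python sketch of a single neuron. The function name `neuron` and the parameter names `x`, `w`, and `b` are hypothetical, chosen to mirror ‘X’, ‘W’, and ‘B’ from the text; the example values are arbitrary.

```python
def neuron(x: float, w: float, b: float) -> float:
    """A single artificial neuron: scale the input by a weight, then add a bias."""
    return w * x + b

# Example: with weight 2.0 and bias 1.0, an input of 3.0 maps to 2.0 * 3.0 + 1.0.
print(neuron(3.0, w=2.0, b=1.0))  # → 7.0
```

Changing `w` changes how strongly the output reacts to the input, while changing `b` shifts every output up or down by the same amount.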

This equation allows the AI system to map an input value to a desired output value. The weight ‘W’ and bias ‘B’ are not chosen arbitrarily; they are learned through a process called training. During training, the AI system repeatedly adjusts these parameters to shrink the gap between the output it produces and the desired output, until inputs are transformed correctly into outputs.
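The text does not say which training method is used, so the sketch below assumes gradient descent on mean squared error, one common choice. It starts with ‘W’ and ‘B’ at zero and nudges them toward values that reproduce a set of example input/output pairs. The function name `train`, the learning rate `lr`, the number of `epochs`, and the sample data (generated from Z(X) = 2X + 1) are all illustrative assumptions.

```python
def train(xs, ys, lr=0.01, epochs=5000):
    """Fit w and b so that w * x + b approximates y.

    Assumes plain gradient descent on mean squared error:
    L = (1/n) * sum((w*x + b - y)^2)
    """
    w, b = 0.0, 0.0  # starting values before training
    n = len(xs)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in zip(xs, ys):
            error = (w * x + b) - y       # how far the current output is from the target
            grad_w += 2 * error * x / n   # partial derivative of L with respect to w
            grad_b += 2 * error / n       # partial derivative of L with respect to b
        # Move each parameter a small step in the direction that reduces the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]  # targets from the hypothetical rule Z(X) = 2X + 1
w, b = train(xs, ys)
print(round(w, 3), round(b, 3))  # → 2.0 1.0
```

The loop never sees the rule Z(X) = 2X + 1 directly; it recovers ‘W’ ≈ 2 and ‘B’ ≈ 1 purely from the examples, which is the essence of training.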

In essence, although a powerful neural network might appear incredibly complex, it is fundamentally just mathematics. By connecting many of these artificial neurons and training their weights and biases, we enable AI systems to perform a wide variety of tasks.
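A rough sketch of what "connecting" neurons can look like: the outputs of two neurons become the inputs of a third. This goes one step beyond the text in two labeled ways. First, each neuron here takes a weighted sum of several inputs, the multi-input form of W · X + B. Second, it assumes a ReLU activation between layers; real networks typically pass neuron outputs through a nonlinear function like this, which the text above does not cover. The weights and biases are hand-picked, hypothetical values; in practice they would be learned through training.

```python
def relu(z: float) -> float:
    """Assumed nonlinear activation: keep positive values, replace negatives with zero."""
    return max(0.0, z)

def neuron(inputs, weights, bias):
    """Weighted sum of several inputs plus a bias (multi-input form of W * X + B)."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias

def tiny_network(x: float) -> float:
    # Hidden layer: two neurons each read the same input x.
    h1 = relu(neuron([x], [1.0], -1.0))
    h2 = relu(neuron([x], [-1.0], 1.0))
    # Output neuron: combines the two hidden outputs into one result.
    return neuron([h1, h2], [2.0, 3.0], 0.5)

print(tiny_network(3.0))  # → 4.5
```

Even with activations added, every step is still ordinary arithmetic: multiply, add, and compare with zero.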