Think about all the ways we use computers today—shopping on your smartphone, gaming on your PC, or even just watching videos on your laptop. All these activities are powered by what is essentially a more complex form of a simple light switch. The brain of your computer, the CPU or Central Processing Unit, contains billions of tiny components called transistors, each acting like a tiny light switch that can be either on or off.

When a transistor is on, it represents a “1”, and when off, it represents a “0”. This binary language of ones and zeros is the most basic language a computer understands. It’s fascinating how these simple binary commands can lead to the complex, sophisticated devices we rely on.

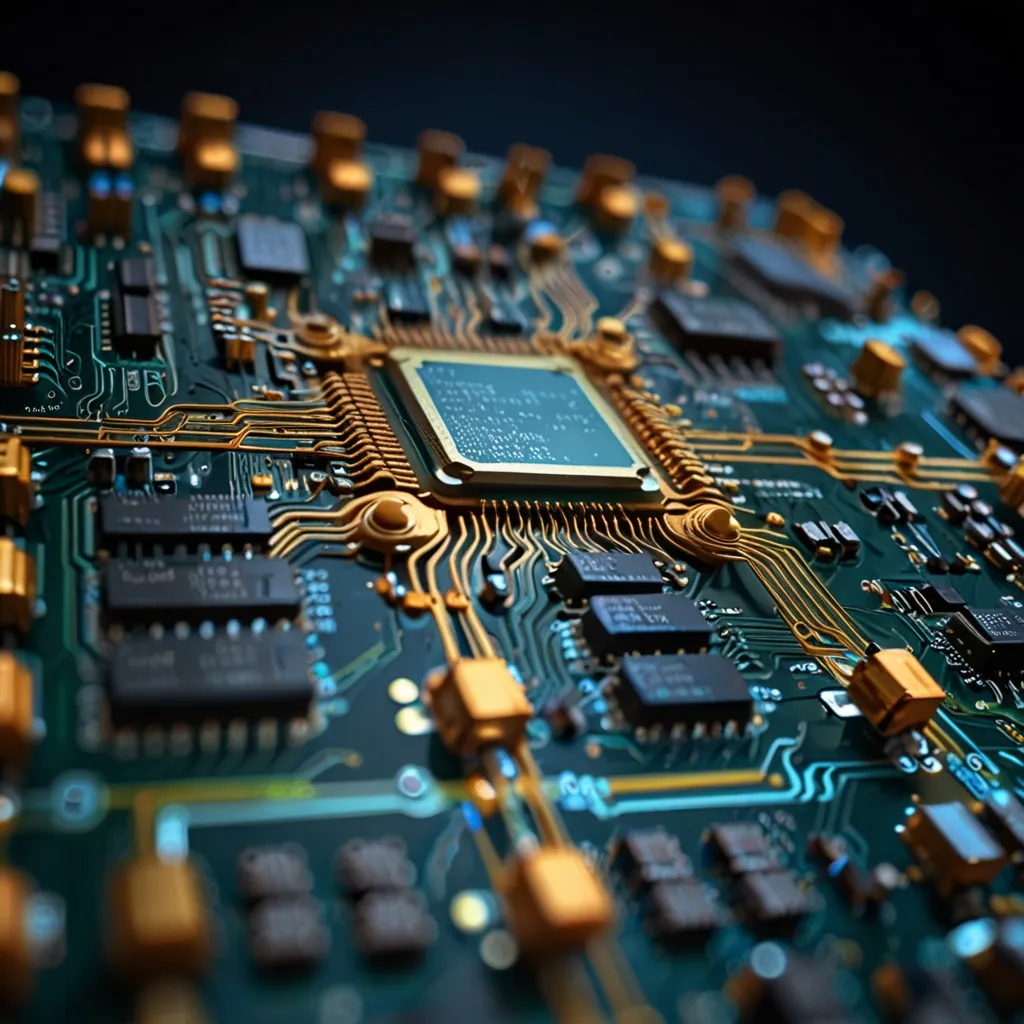

To understand this better, we need to peek inside the CPU. Classical CPUs are built from transistors wired into logic gates, which perform the actual computations. The Intel 4004, introduced in 1971, was the first commercially available microprocessor. It had roughly 2,300 transistors and was a 4-bit processor, meaning it handled data in chunks of four binary digits. Fast forward to today, and modern processors are 64-bit monsters with billions of transistors.

Despite their advanced capabilities, modern CPUs still rely on simple principles. Components such as registers and caches handle data management and storage, but the core processing happens in the Arithmetic Logic Unit (ALU), which executes mathematical calculations and logical operations.

A CPU adds two numbers, which it already stores in binary, by routing their bits through logic gates such as AND, OR, and XOR. These gates are built from transistors arranged to perform specific operations. For instance, in a full adder circuit, several gates work together to add one pair of bits plus any carry from the previous column, and a chain of full adders handles a whole number, much like carrying digits in elementary addition.
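To see how little is needed, here is a minimal Python sketch of a full adder and of a chain of them (a "ripple-carry" adder). The function names `full_adder` and `ripple_carry_add` and the default 4-bit width are assumptions chosen for illustration. Real hardware does this with wired gates, not software, and real CPUs often use faster adder designs.

```python
from typing import Tuple


def full_adder(a: int, b: int, carry_in: int) -> Tuple[int, int]:
    """Add three single bits using only XOR, AND, and OR operations."""
    partial = a ^ b                              # XOR: sum of a and b, ignoring carry
    sum_bit = partial ^ carry_in                 # XOR again to fold in the incoming carry
    carry_out = (a & b) | (partial & carry_in)   # carry if at least two inputs are 1
    return sum_bit, carry_out


def ripple_carry_add(x: int, y: int, width: int = 4) -> int:
    """Add two unsigned integers bit by bit, passing the carry along the chain."""
    carry = 0
    result = 0
    for i in range(width):
        a = (x >> i) & 1          # bit i of x
        b = (y >> i) & 1          # bit i of y
        sum_bit, carry = full_adder(a, b, carry)
        result |= sum_bit << i    # place the sum bit in column i
    # Keep the final carry as an extra top bit so the result does not wrap around.
    return result | (carry << width)


if __name__ == "__main__":
    print(ripple_carry_add(5, 6))   # 0101 + 0110
    print(ripple_carry_add(9, 8))   # 1001 + 1000, needs a fifth bit
```

With the default width, the inputs must fit in four bits, which mirrors the 4-bit data path of a chip like the 4004.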

Things get really interesting when we move into the realm of quantum computing. Quantum computers use qubits instead of bits. Thanks to a phenomenon known as superposition, a qubit can exist in a weighted combination of 0 and 1 at the same time, and it only settles on one value when it is measured. For certain kinds of problems, this lets quantum processors work far more efficiently than classical processors.
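To make superposition a little more concrete, the sketch below models a single qubit as a pair of amplitudes, one for 0 and one for 1, and applies the Hadamard gate, a standard gate that turns a definite 0 into an equal mix of 0 and 1. This is a toy simulation on an ordinary computer, not how quantum hardware works, and the helper names `hadamard` and `probabilities` are assumptions made for this example.

```python
import math


def hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (amp_0, amp_1)."""
    amp_0, amp_1 = state
    scale = 1 / math.sqrt(2)
    return (scale * (amp_0 + amp_1), scale * (amp_0 - amp_1))


def probabilities(state):
    """Chance of measuring 0 or 1: the squared magnitude of each amplitude."""
    amp_0, amp_1 = state
    return (abs(amp_0) ** 2, abs(amp_1) ** 2)


if __name__ == "__main__":
    zero = (1.0, 0.0)                # a qubit that is definitely 0
    superposed = hadamard(zero)      # now an equal mix of 0 and 1
    print(probabilities(superposed)) # each outcome has probability of about 0.5
```

Applying `hadamard` a second time brings the qubit back to a definite 0 (up to floating-point rounding), which shows that superposition is a reversible state and not just hidden randomness.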

Quantum logic gates, like the CNOT and Toffoli gates, take over the role that AND, OR, and XOR gates play in classical circuits. Because these gates act on qubits in superposition, a quantum computer can process many possible inputs in a single operation, although a measurement still returns only one result. As a result, these machines excel at certain types of problems, like optimization and complex search tasks, rather than replacing classical computers for everyday tasks.
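The link between the two families of gates is easiest to see on plain 0 and 1 inputs. The sketch below, with the hypothetical helper names `cnot` and `toffoli`, shows only how these gates behave on definite bit values. It deliberately ignores superposition, so it illustrates the logic and is not a quantum simulation.

```python
def cnot(control: int, target: int):
    """CNOT: flip the target bit only when the control bit is 1."""
    return control, target ^ control


def toffoli(control_1: int, control_2: int, target: int):
    """Toffoli: flip the target bit only when both control bits are 1."""
    return control_1, control_2, target ^ (control_1 & control_2)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            # The target output of CNOT equals a XOR b.
            _, xor_out = cnot(a, b)
            # With the target starting at 0, Toffoli's target output equals a AND b.
            _, _, and_out = toffoli(a, b, 0)
            print(a, b, "XOR:", xor_out, "AND:", and_out)
```

Unlike a classical AND gate, both gates keep their inputs alongside the result, so applying the same gate twice restores the original values. That reversibility is a requirement for quantum gates.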

Quantum computing might sound like a futuristic dream, but it’s rapidly becoming a reality, with potential to revolutionize many fields. Classical computing has paved the way, but the future is looking quantum.